mgsolipa, Jan 15, 2014 12:22

Hi @mgsolipa

Thanks for your reply. I followed every performance optimization procedure a long time ago, and every cache is on. I have AWStats and other statistics programs, but I can't see what is wrong with my domain, except that since updating to the latest b2evolution version, "visitors" have gone from 300 a day to 6,000, and I know the latter figure is not true.

I know I don't have so many visitors that my domain needs to be hosted on a VPS or a higher type of account.

I really don't know what to do and I would appreciate some help.

www.inmotionhosting.com has suspended my account again, even though I have trimmed my b2evolution website down until it is almost useless.

@gcasanova: do you have b2evolution's internal hit logging turned on?

If so, what do the Analytics pages say? Pay special attention to Ajax and Service requests.

You may be the target of heavy spam attacks.

This quote from InMotion shows very irregular activity on your site, which may be consistent with spam attacks:

Hourly hits (13/Jan/2014):

| Hour | Hits |
|---|---|
| 07 | 689 |
| 08 | 2154 |
| 09 | 3336 |
| 10 | 2349 |
| 11 | 2529 |
| 12 | 5453 |
| 13 | 9049 |
| 14 | 11517 |
| 15 | 11401 |
| 16 | 11765 |
| 17 | 788 |
| 18 | 710 |
| 19 | 960 |
| 20 | 650 |
| 21 | 586 |
| 22 | 20 |

After looking at it, you may want to turn hit logging off in order to use fewer resources.

And of course, disable all plugins you don't need.

At some point you will definitely have outgrown what you can do with a shared hosting account though.

PS: You may also ask InMotion for more specific information about why they disabled your account. Do they have a limit on the number of SQL requests? On CPU usage? Exactly which limit did your site trigger? At what time, and with how many requests?

This will help us determine whether you simply have more visitors (including spammers) than InMotion wants to handle on shared hosting, or whether someone hit specific pages that went crazy on the server (sometimes a single faulty plugin is enough).

Hi @fplanque:

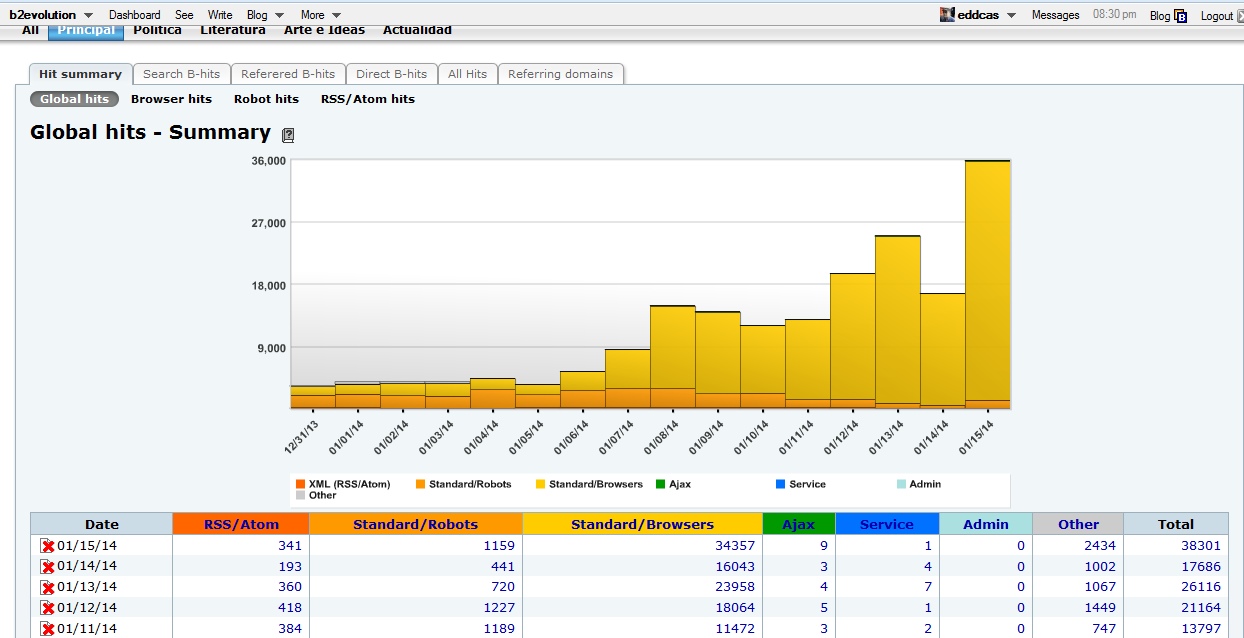

Take a look at the analytics image: it is quite suspicious how much the traffic has grown over the last few days. This seems to be some sort of spam attack; it is not normal.

But how can I defend the site against whatever is happening?

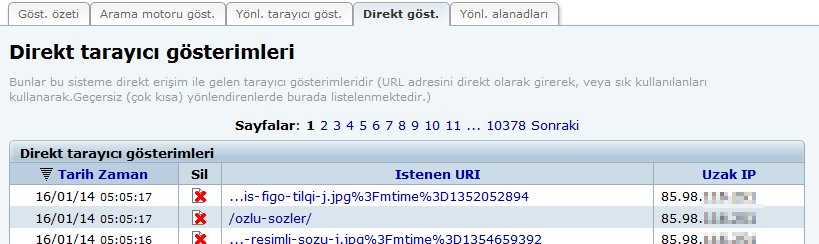

Well, you're very limited since you are on shared hosting. But, off the top of my head: you can go to Direct B-hits, which lists the request time and IP. Look for repeating IPs and check whether their request frequency is humanly possible. If you see something suspicious, you can block those IPs.
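The "is this frequency humanly possible?" check can be sketched in Python: given (IP, timestamp) pairs transcribed from the Direct B-hits list, find each IP's busiest 60-second window. The input format, the 60-second window, the 20-hits threshold and the sample IPs are all assumptions for illustration; tune them to what a human reader could plausibly do on your site.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta


def max_hits_in_window(hits, window=timedelta(seconds=60)):
    """Map each IP to the most hits it made within any single `window`.

    `hits` is an iterable of (ip, datetime) pairs, e.g. transcribed by hand
    from the Direct B-hits list (hypothetical input format).
    """
    times_by_ip = defaultdict(list)
    for ip, when in hits:
        times_by_ip[ip].append(when)

    busiest = {}
    for ip, times in times_by_ip.items():
        times.sort()
        recent = deque()  # hits inside the window ending at the current hit
        best = 0
        for t in times:
            recent.append(t)
            # Drop hits that are `window` or more older than the current one.
            while t - recent[0] >= window:
                recent.popleft()
            best = max(best, len(recent))
        busiest[ip] = best
    return busiest


def suspicious_ips(hits, threshold=20):
    """IPs with more than `threshold` hits in some 60-second window.

    The threshold of 20 is an arbitrary example value.
    """
    return sorted(ip for ip, n in max_hits_in_window(hits).items() if n > threshold)


if __name__ == "__main__":
    start = datetime(2014, 1, 13, 14, 0, 0)
    # Hypothetical data: one IP hitting every 2 s, one reader every 5 minutes.
    sample = [("192.0.2.10", start + timedelta(seconds=2 * i)) for i in range(30)]
    sample += [("198.51.100.7", start + timedelta(minutes=5 * i)) for i in range(10)]
    print(max_hits_in_window(sample))
    print(suspicious_ips(sample))
```

A sliding window is used instead of fixed clock minutes so that a burst straddling, say, 14:00:59 and 14:01:00 is not split in two.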

@gcasanova can you also screenshot the "Browser hits" graph for further info about what's in the yellow bars?

However, one of the following is probably the case:

- you got mentioned prominently on a site that sends you a lot of traffic

- you are getting spammed big time

I checked the Direct B-hits and to me they don't look suspicious. In some cases I see 10 or more hits from the same IP, but I don't consider that spam or an attack. What do you think?

When I asked InMotion Hosting for more information about my domain's resource usage, this is what they replied:

You would need to check your b2evolution installation to see if its internal hit logging is enabled. Your account was disabled due to high CPU usage. To view your account's CPU usage, you may use the Resource Usage graph, which is located in cPanel under the Logs section.

In order to get a better handle on your traffic, you may want to look into implementing Cloudflare on ...net. Cloudflare is a third-party service that specializes in analyzing and mitigating traffic to your domain by caching and serving your content from its servers. Below I have included more information on Cloudflare's services.

http://www.inmotionhosting.com/support/team/setting-up-cloudflare

http://www.cloudflare.com/overview

Looking at your second graph, it seems the majority of your traffic is self-referred. Given the sudden increase, this is characteristic of crawlers scraping your site. It's not so much that someone is trying to spam you; it's more that someone is scraping all the pages of your site in order to reuse the content on a spammy site. Or maybe they are trying to find your comment forms but can't (because the forms are loaded via Ajax).

Scraping may come from many different IPs simultaneously.

Cloudflare may be an effective solution depending on how you set it up.

For reference, can you please post the CPU usage graph InMotion is pointing you to, and in general all the graphs they provide in their "Logs" section? This may help us reduce the load a little in case of scraper activity.

Also, in this case you may turn off b2evo's hit logging completely, just to decrease the CPU load, as explained under "extreme situations" here: http://b2evolution.net/man/advanced-topics/performance/performance-optimization

@gcasanova Note: Thank you for the screenshots. I also re-used them to create a Manual page for this: http://b2evolution.net/man/advanced-topics/fighting-spam/recognizing-a-spam-attack

Hello @gcasanova/@fplanque!

Thanks for the information, and apologies for the problems with the resource-related account suspension. I recently commented on the "recognizing a spam attack" article listed above. I work with the InMotion Community Support Team, and we are often tasked with hunting down issues like this beyond what our customers get through our normal technical support team. We reviewed the case and found a few things. The bots hitting your site, mentioned in an earlier post, were one of the main issues: we confirmed that at least 2 bots are hitting your pages. Interestingly, one of the people who reviewed your account is a former systems specialist who helped this same customer 2 years ago with a Yandex bot. He said that the same code used to prevent that can help in this case. You don't need to specify the bot; you can modify the existing code (in .htaccess) to be less specific and address the current bots.

I hope this helps to further clarify the issue. If we find any further information, I will update this post.

Here's a copy of the information regarding the issue that was addressed previously on this same account (by Jacob N.):

    RewriteEngine On
    RewriteCond %{HTTP_USER_AGENT} ^.*(MJ12bot).*$ [NC]
    RewriteRule .* - [R=403,L]

Thank you for taking further steps to help reduce your account's usage. I would also recommend blocking the Yandex.com search engine robot, unless you are also targeting users in Russia. If you are not, having it crawl and index your site just uses up additional resources.

To accomplish this, I've added the following rules to this file:

/home/xxxxxxd8/public_html/xxxxxxxnova.net/.htaccess

    ErrorDocument 503 "Site temporarily disabled for crawling"
    RewriteEngine On
    RewriteCond %{HTTP_USER_AGENT} Yandex [NC]
    RewriteCond %{REQUEST_URI} !^/robots\.txt$
    RewriteRule .* - [R=503,L]

Then I added the following to your /home/xxxxxxrd8/public_html/xxxxxnova.net/robots.txt file:

    User-agent: Yandex
    Disallow: /

This temporarily answers this bot's requests with a 503 (which tells it the server is busy and to try again later). The exception is a request for the robots.txt file: that is let through, so the bot can read the rule telling it that it is disallowed from crawling your site. This should help cut down the total number of future requests, since from that point on the bot should only request your robots.txt file periodically to see whether your rules have changed.
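Python's standard urllib.robotparser module offers a quick way to check what the robots.txt rule above tells a crawler that honors it. Note that robotparser matches on the crawler's product token, so the sketch passes "YandexBot" rather than Yandex's full User-Agent string. Bots that ignore robots.txt are unaffected by this file and are only stopped by the 503 rewrite rule.

```python
from urllib.robotparser import RobotFileParser

# The robots.txt content added above.
ROBOTS_TXT = """\
User-agent: Yandex
Disallow: /
"""


def may_crawl(user_agent_token, path):
    """Return True if robots.txt allows `user_agent_token` to fetch `path`."""
    parser = RobotFileParser()
    parser.parse(ROBOTS_TXT.splitlines())
    return parser.can_fetch(user_agent_token, path)


if __name__ == "__main__":
    print(may_crawl("YandexBot", "/index.php"))  # False: Yandex is disallowed everywhere
    print(may_crawl("Googlebot", "/index.php"))  # True: no rule applies to other bots
```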

--------------------------------

Jacob also added this script to your site so you can see further information about the user-agents hitting your site:

http://xxxxxxnova.net/botDetect.php

user= admin

password= pass

(NOTE: The website name has been obscured above for privacy. You will need to use the proper URL in order to run the script.)

Thanks for your patience and your time!

Regards,

Arnel C.

InMotion Community Support Team

Thanks for your kind reply.

As you rightly said, Jacob N. was very helpful some time ago when a similar issue happened. I thought his addition to the .htaccess would be enough to avoid future problems, but due to my lack of knowledge I was wrong.

Here is what is in my .htaccess. What would need to be added?

And again, thank you!

    ErrorDocument 503 "Site temporarily disabled for crawling"
    RewriteEngine On
    RewriteCond %{HTTP_USER_AGENT} Yandex [NC]
    RewriteCond %{REQUEST_URI} !^/robots\.txt$
    RewriteRule .* - [R=503,L]

    # Apache configuration for the blog folder
    # Lines starting with # are considered as comments.

    # PHP SECURITY:
    # this will make register globals off in the evo directory
    <IfModule mod_php4.c>
      php_flag register_globals off
    </IfModule>
    <IfModule mod_php5.c>
      php_flag register_globals off
    </IfModule>

    # PHP5
    # This may need to be in each folder:
    # AddHandler application/x-httpd-php5 .php

    # CLEAN URLS:
    # If you're using Apache 2, you may wish to try this if clean URLs don't work:
    # AcceptPathInfo On

    # DEFAULT DOCUMENT TO DISPLAY:
    # this will select the default blog template to be displayed
    # if the URL is just .../blogs/
    <IfModule mod_dir.c>
      DirectoryIndex index.php index.html
    </IfModule>

    # CATCH EVERYTHING INTO B2EVO:
    # The following will allow you to have a blog running right off the site root,
    # using index.php as a stub but not showing it in the URLs.
    # This will add support for URLs like: http://example.com/2006/08/29/post-title
    <IfModule mod_rewrite.c>
      RewriteEngine On
      # This line may be needed or not.
      # enabling this would prevent running in a subdir like /blog/index.php
      # This has been disabled in v 4.0.0-alpha. Please let us know if you find it needs to be enabled.
      # RewriteBase /
      # Redirect anything that's not an existing directory or file to index.php:
      RewriteCond %{REQUEST_FILENAME} !-d
      RewriteCond %{REQUEST_FILENAME} !-f
      RewriteRule ^ index.php
    </IfModule>

    <Files 403.shtml>
      order allow,deny
      allow from all
    </Files>

    deny from 200.40.120.147

    <IfModule mod_expires.c>
      ExpiresActive On
      ExpiresByType application/javascript "access plus 1 week"
      ExpiresByType text/css "access plus 1 month"
      ExpiresByType image/gif "access plus 1 month"
      ExpiresByType image/png "access plus 1 month"
      ExpiresByType image/jpeg "access plus 1 month"
      # Now we have Expires: headers, ETags are unnecessary and only slow things down:
      FileETag None
    </IfModule>

@gcasanova : I think Arnel already edited your .htaccess file.

How are your traffic graphs evolving?

@arncus : Thank you for your answers.

You seem to be blocking the Yandex and Majestic robots through .htaccess, which may be justified, but I don't think this would solve the current problem. b2evolution already detects those robots when they identify themselves (see /conf/_stats.php -> $user_agents = array( ... ) ), and identified robots should appear in light orange on the first graph shown above.
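To illustrate the point, here is a simplified Python sketch of signature-based robot detection: a hit counts as a robot only if its User-Agent contains a known robot signature. This is not b2evolution's code (which is PHP, driven by the $user_agents array in /conf/_stats.php), and the signature list is a hypothetical stand-in. It shows why a robot sending an ordinary browser User-Agent ends up counted as a browser.

```python
# Hypothetical subset of robot signatures; b2evolution's real list is the
# $user_agents array in /conf/_stats.php.
ROBOT_SIGNATURES = ("yandex", "mj12bot", "googlebot", "bingbot")


def classify_hit(user_agent):
    """Return "robot" if the User-Agent contains a known robot signature
    (case-insensitive substring match), otherwise "browser"."""
    ua = user_agent.lower()
    if any(signature in ua for signature in ROBOT_SIGNATURES):
        return "robot"
    return "browser"


if __name__ == "__main__":
    # A crawler that identifies itself is counted as a robot.
    print(classify_hit("Mozilla/5.0 (compatible; YandexBot/3.0; +http://yandex.com/bots)"))  # robot
    # A suspected scraper sending a browser-like User-Agent is counted as a browser.
    print(classify_hit(
        "Mozilla/5.0 (X11; U; Linux i686; es-AR; rv:1.9.0.11) "
        "Gecko/2009061212 Firefox/3.0.11 (Canaima GNU/Linux)"
    ))  # browser
```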

In this case I believe the site gets hit by robots that don't identify themselves as such (and probably don't care about robots.txt either). So they would probably need to be throttled differently.

Do your log files show more Yandex hits than what we detect?

Hello Fplanque,

Last evening I took the lead in replying on this issue, but Jacob N. is actually the one making any edits or additions to the website at this point. When we looked at the traffic hitting the site, there were a lot of repetitive hits coming specifically from the Majestic bot. That is why we suggested expanding the code blocking Yandex to also block the Majestic bot. As far as I know, the logs do not show more Yandex hits. Jacob will also reply shortly with more information, as he's delving further into the issue.

Thanks!

Arnel C.

@arncus OK, thank you. I'll wait for Jacob's answer about whether the hit increase came from identifiable bots (like Majestic or Yandex) or, as I fear, from sneaky unidentified bots.

Note: I have added a little info on: http://b2evolution.net/man/advanced-topics/fighting-spam/recognizing-a-spam-attack

*Note for the future*: For b2evolution 5.1 (exact version may vary) we are planning to add the following features to the Analytics section:

This should help solve issues like this faster.

Hey @gcasanova !

Looks like I did help you with similar issues back when I was a system administrator all the way back in March of 2012.

At the time I was seeing a lot of usage from the Yandex bot, but this time around it looks like more bots, or possibly malicious or unwanted users, have joined the party. As @fplanque pointed out, these don't appear to be bots sending legitimate User-Agents that would label them as bots in your stats.

@fplanque it doesn't look like @arncus or myself added anything extra to the .htaccess file yet.

I did take a look at the /conf/_stats.php file and do see where Yandex is included in the $user_agents array.

So I'm assuming those requests should be represented in the Standard/Robots column, instead of Standard/Browsers, which has seen the largest influx since 1/6.

We weren't really seeing any requests from Yandex this time around, since its requests have been blocked for a while now.

One thing I've gone ahead and done for your account, @gcasanova, is set up cPanel log archiving. That way we can see an entire day's worth of logs historically. Unfortunately, right now the only data I have to work with is from 07:33 today until now, since that is when your access logs rotated.

Unfortunately, on shared hosting you can't parse this information very easily, but I'm using the same steps mentioned in this guide for doing it on a VPS or dedicated server:

http://www.inmotionhosting.com/support/website/server-usage/parse-archived-raw-access-logs-from-cpanel

For the 9 hours of logs I do have today, it appears that 556 unique IP addresses requested 5,524 non-static resources (that is, excluding images, CSS and JS files).
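For anyone wanting to produce similar figures from a raw access log, here is a minimal Python sketch. It assumes Apache's "combined" log format and treats a request as static if its path ends in one of a hypothetical list of extensions. It is not necessarily how Jacob computed his numbers (his steps are in the linked guide), and the sample log lines are made up.

```python
import re

# Apache "combined" log format:
# IP ident user [time] "METHOD path PROTOCOL" status size "referer" "user-agent"
LOG_LINE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+)[^"]*" (?P<status>\d{3}) \S+ '
    r'"(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)

# Hypothetical list of extensions counted as "static" (images, CSS, JS).
STATIC_EXTENSIONS = (".png", ".gif", ".jpg", ".jpeg", ".ico", ".css", ".js")


def summarize(lines):
    """Return (unique IPs, request count) over non-static requests.

    Lines that don't match the combined format are skipped.
    """
    ips = set()
    requests = 0
    for line in lines:
        match = LOG_LINE.match(line)
        if match is None:
            continue
        # Ignore any query string when checking the extension.
        path = match.group("path").split("?", 1)[0].lower()
        if path.endswith(STATIC_EXTENSIONS):
            continue
        ips.add(match.group("ip"))
        requests += 1
    return len(ips), requests


# Made-up sample lines in combined format.
SAMPLE_LOG = [
    '108.162.212.31 - - [13/Jan/2014:07:58:01 -0500] "GET /index.php HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (X11; U; Linux i686; es-AR)"',
    '108.162.212.31 - - [13/Jan/2014:07:58:02 -0500] "GET /skins/style.css HTTP/1.1" 200 900 "-" "Mozilla/5.0 (X11; U; Linux i686; es-AR)"',
    '203.0.113.5 - - [13/Jan/2014:07:59:10 -0500] "POST /htsrv/anon_async.php HTTP/1.1" 200 42 "-" "Mozilla/5.0"',
    "not a log line",
]

if __name__ == "__main__":
    unique_ips, non_static = summarize(SAMPLE_LOG)
    print(unique_ips, "unique IPs requested", non_static, "non-static resources")
```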

Pretty much all of these IPs were different CloudFlare IPs, so unfortunately you can't really pinpoint one particular IP as problematic. This is because the IP in your access logs doesn't reflect the actual end user who requested the page.

A good example is the IP address 108.162.212.31, which made 115 requests today. These were the various User-Agents behind those requests (count, User-Agent):

    1 Mozilla/5.0 (Windows NT 6.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/32.0.1700.76 Safari/537.36
    1 Mozilla/5.0 (Windows NT 6.1; Trident/7.0; rv:11.0) like Gecko
    6 Mozilla/5.0 (Windows NT 5.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/32.0.1700.76 Safari/537.36
    41 Mozilla/5.0 (X11; U; Linux i686; es-ES; rv:1.9.2.13) Gecko/20110412 Cunaguaro/3.6.13+14 (like Firefox/3.6.13+14)
    66 Mozilla/5.0 (X11; U; Linux i686; es-AR; rv:1.9.0.11) Gecko/2009061212 Firefox/3.0.11 (Canaima GNU/Linux)

Both User-Agents starting with "Mozilla/5.0 (X11; U; Linux i686; es" seemed to be related to bot or malicious-user activity. Or possibly a bunch of people just happen to be using the exact same web browser and are also being assigned the same CloudFlare IP for their requests (pretty unlikely, I'd think).
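A per-IP User-Agent breakdown like the one above amounts to counting User-Agent values for a single client IP. A minimal Python sketch, assuming the (IP, User-Agent) pairs have already been extracted from the log; the agent names in the example are shortened placeholders, and the second IP is a hypothetical documentation address:

```python
from collections import Counter


def agents_for_ip(pairs, ip):
    """Count the User-Agents seen for one client IP, most frequent first.

    `pairs` is an iterable of (client_ip, user_agent) tuples already
    extracted from the access log.
    """
    counts = Counter(agent for client_ip, agent in pairs if client_ip == ip)
    return counts.most_common()


if __name__ == "__main__":
    cloudflare_ip = "108.162.212.31"
    # Shortened placeholder agent names; counts echo the breakdown above.
    pairs = (
        [(cloudflare_ip, "Firefox 3.0.11 (Canaima)")] * 66
        + [(cloudflare_ip, "Cunaguaro 3.6.13")] * 41
        + [(cloudflare_ip, "Chrome 32 / Windows NT 5.1")] * 6
        + [("203.0.113.5", "Chrome 32 / Windows NT 5.1")] * 2
    )
    for agent, count in agents_for_ip(pairs, cloudflare_ip):
        print(count, agent)
```

Behind a proxy such as CloudFlare this only groups requests by proxy address, which is exactly why several unrelated-looking agents share one IP here.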

Here are their requests per minute to your site, followed by the URLs they requested:

    Mozilla/5.0 (X11; U; Linux i686; es-AR
    12 07:58
    12 07:59
    6 08:01
    12 08:12
    1 08:13
    11 08:16
    5 08:24
    7 08:25

    1 POST /htsrv/anon_async.php
    5 GET /index.php/los-grandes-educadores-de-venezuela
    60 GET /index.php

    Mozilla/5.0 (X11; U; Linux i686; es-ES
    15 08:02
    1 08:03
    23 08:05
    1 08:12
    1 08:19

    7 GET /index.php/los-grandes-educadores-de-venezuela
    34 GET /index.php

OK, taking all that into account, I'm not going to edit what I already wrote above, but I also took a look at the page they're hitting, /index.php/los-grandes-educadores-de-venezuela, and I think I stumbled upon an even bigger cause of the usage that maybe you can shed some light on.

That page has 15 comments at the bottom, each with an avatar image for the user. However, those images are not displaying. One of them links to the URL /plugins/visiglyph_plugin/visiglyphs/bdb3896aff664027b57eb4a98b50b2bf.png

The /plugins/visiglyph_plugin directory doesn't exist on your site, so it should throw a 404 error saying that the file couldn't be found. But instead it's doing a 301 redirect and causing the index.php script to run again.

So it looks like the missing plugin, combined with something in your .htaccess rules that I have yet to locate, is causing an excessive number of internal requests that keep firing your PHP script.

When I run top in batch mode:

http://www.inmotionhosting.com/support/website/server-usage/using-the-linux-top-command-in-batch-mode

This is what spawns on your account from simply requesting that non-existent .png image directly; it fires off 4 separate PHP processes to handle the request:

    15202 userna5 20 0 154m 14m 7088 R 34.4 0.0 0:00.07 /opt/php52/bin/php-cgi /home/userna5/public_html/****.net/index.php
    15250 userna5 20 0 153m 13m 6840 R 28.3 0.0 0:00.07 /opt/php52/bin/php-cgi /home/userna5/public_html/****.net/index.php
    15285 userna5 20 0 163m 24m 7400 R 74.1 0.1 0:00.13 /opt/php52/bin/php-cgi /home/userna5/public_html/****.net/index.php
    15294 userna5 20 0 156m 16m 7088 R 34.6 0.0 0:00.06 /opt/php52/bin/php-cgi /home/userna5/public_html/****.net/index.php

When I request the full URL /index.php/los-grandes-educadores-de-venezuela, 17 PHP processes spawn.

So I think this is ultimately what has been causing your recent problems: I saw 1,915 requests to /plugins/visiglyph_plugin/ in the available logs.

I've gone ahead and added this rule to your .htaccess file to temporarily fix the problem until you can figure out why the plugin is missing:

    RewriteCond %{REQUEST_URI} ^/plugins/visiglyph_plugin/ [NC]
    RewriteRule .* - [R=503,L]

These were the hits for that plugin so far today, broken down by hour (requests, hour):

    64 07:00
    239 08:00
    153 09:00
    198 10:00
    197 11:00
    127 12:00
    280 13:00
    208 14:00
    132 15:00
    248 16:00
    69 17:00

So hopefully, with those requests blocked now, your usage should drop quite a bit. Unfortunately, I believe these hits will still show up in your b2evolution stats. Re-adding the missing plugin, or deleting the comments so that pages no longer try to process the avatar images through your PHP script, should resolve the problem completely.
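As a quick consistency check, the hourly figures above add up exactly to the 1,915 visiglyph requests mentioned earlier, with the busiest hour at 13:00:

```python
# Hourly requests to /plugins/visiglyph_plugin/, as listed above (hour -> requests).
VISIGLYPH_HITS = {
    "07:00": 64, "08:00": 239, "09:00": 153, "10:00": 198,
    "11:00": 197, "12:00": 127, "13:00": 280, "14:00": 208,
    "15:00": 132, "16:00": 248, "17:00": 69,
}

total = sum(VISIGLYPH_HITS.values())
peak_hour = max(VISIGLYPH_HITS, key=VISIGLYPH_HITS.get)
print(total, peak_hour)  # 1915 13:00
```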

Now that the archived logs are set up, I can also take a look after a few full days of access to see whether any other trends pop up as obvious problems.

- Jacob

Hey again @gcasanova,

I looked at yesterday's archived access logs, which I set up for you. Another request I saw causing some issues was for /E_GuestBook.asp.

This was yet another file that doesn't exist on your site. Because your site is on a Linux server, .asp scripts won't run anyway: they are Microsoft Active Server Pages, which require the IIS web server or the Apache::ASP Perl project. I'm not sure whether you used to have a Windows server where this file existed at one point, or whether bots are just trying to find a way to spam you.

Instead of producing a 404 error, it was forcing a redirect to your index.php script, which again consumed a good amount of CPU time.

It looks like this is the part of your .htaccess file that sends all of these missing files to your index.php script:

    # Redirect anything that's not an existing directory or file to index.php:
    RewriteCond %{REQUEST_FILENAME} !-d
    RewriteCond %{REQUEST_FILENAME} !-f
    RewriteRule ^ index.php

I saw these requests in yesterday's logs, each of which would have cost some CPU time through this redirection:

    42 POST /GuestSave.asp
    55 GET /guestadd.asp
    83 GET /E_GuestBook.asp

I've continued adding to your .htaccess file so that it also blocks .asp page requests going forward, and they stop triggering executions of your index.php script:

    RewriteCond %{REQUEST_URI} ^/plugins/visiglyph_plugin/ [NC,OR]
    RewriteCond %{REQUEST_URI} \.asp$
    RewriteRule .* - [R=503,L]

It definitely looked like bot activity, because one User-Agent, Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1;), hit those scripts 45 times in less than a minute.

So far today there have been 19 requests for .asp scripts, 5 of which were blocked by the new .htaccess rules in just the last few minutes.
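The combined rule returns a 503 when either condition holds: the request path starts with /plugins/visiglyph_plugin/ (case-insensitively, because of NC) or ends in .asp (case-sensitively, since that condition has no NC flag). The Python sketch below reproduces only that condition logic, as a way to check which logged paths would be blocked; it does not model the rest of mod_rewrite.

```python
import re

# Mirrors the two RewriteCond lines joined with [OR]:
#   RewriteCond %{REQUEST_URI} ^/plugins/visiglyph_plugin/ [NC,OR]
#   RewriteCond %{REQUEST_URI} \.asp$
VISIGLYPH = re.compile(r"^/plugins/visiglyph_plugin/", re.IGNORECASE)  # NC flag
ASP = re.compile(r"\.asp$")  # no NC flag: case-sensitive


def blocked_status(request_uri):
    """Return 503 if the rule would block this REQUEST_URI, else None."""
    if VISIGLYPH.search(request_uri) or ASP.search(request_uri):
        return 503
    return None


if __name__ == "__main__":
    for uri in (
        "/plugins/visiglyph_plugin/visiglyphs/bdb3896aff664027b57eb4a98b50b2bf.png",
        "/E_GuestBook.asp",
        "/index.php/los-grandes-educadores-de-venezuela",
        "/E_GuestBook.ASP",
    ):
        print(blocked_status(uri), uri)
```

As the last case shows, an uppercase .ASP request would slip past this rule; adding NC to the second condition would cover it.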

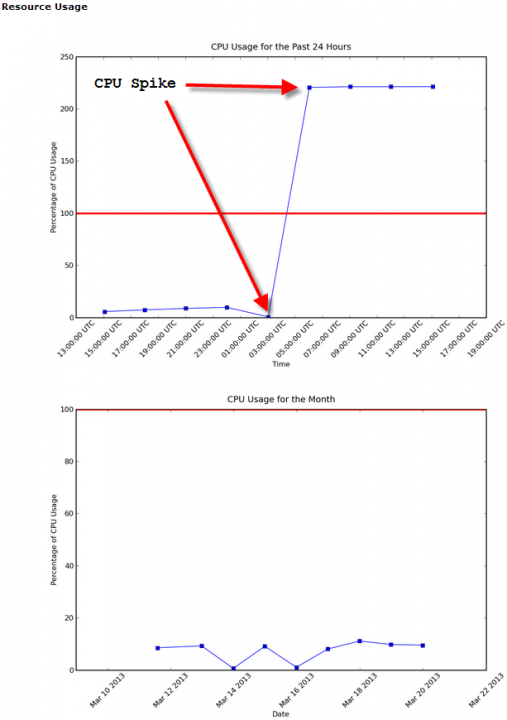

If you look at the Resource Usage tool in cPanel you should see your CPU usage took a nose dive yesterday. Hopefully with the bad avatar images and the .asp script requests blocked, it will drop down even more today.

- Jacob

Hi @jacob,

Thank you for your help.

So would you say the crux of the issue is bots hitting non-existent .asp scripts and non-existent plugin files, which are then redirected to index.php, which in turn generates high CPU load just from b2evolution generating dynamic 404 pages? (In some versions of b2evo, a 404 runs a search for similar results; is this the case here? We may need to rethink this behavior.)

Also, out of curiosity, can you post an anonymized screenshot of the kind of CPU usage increase graph that leads to suspending the account and let me know where users need to click to see it? (I'd like to show that to users who don't understand why they got suspended)

Thank you.

No problem @fplanque!

I'd definitely say the biggest issue was the missing plugin: whenever anyone visited a page with 15 comments, it would spawn 15 separate PHP requests back to index.php. The same went for the non-existent ASP scripts. That dynamic 404 handling through index.php was then intensified by what looked like bot activity, based on the frequency of the requests.

Unfortunately in this case, because requests came from CloudFlare's IP ranges, I could only look at User-Agents in the logs on our server. So it's harder to tell whether a bot is spoofing a legitimate desktop browser agent, or whether there just happen to be a lot of human requests mixed in as well.

I've attached a screenshot showing both the daily and monthly CPU usage graphs from within cPanel. In this example you can see that at around 3:00 AM the usage suddenly jumped from around 5% to over 200%. A spike like that typically points to a sudden huge increase in traffic or a runaway script.

When you have internal loops and possible bot activity, your usage gradually climbs throughout the day instead of spiking all at once like that. But the concept is the same: you want to remain under the red 100% CPU usage line. An occasional spike over it is fine, but when those spikes happen very frequently, or continue over multiple days, that is what can lead to an account suspension.
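The difference between an occasional spike and sustained overuse can be expressed as the longest run of consecutive readings above the 100% line. A minimal Python sketch, assuming CPU readings in percent taken at a fixed interval; the sample values are invented and not read from the screenshot, and InMotion's actual suspension criteria are not known here:

```python
def longest_run_over(samples, limit=100.0):
    """Length of the longest run of consecutive samples strictly above `limit` (percent)."""
    longest = current = 0
    for value in samples:
        current = current + 1 if value > limit else 0
        longest = max(longest, current)
    return longest


if __name__ == "__main__":
    # Invented readings, one per sampling interval.
    brief_spike = [5, 6, 210, 8, 5, 4]
    sustained = [40, 90, 120, 135, 150, 140, 95]
    print(longest_run_over(brief_spike))  # 1
    print(longest_run_over(sustained))    # 4
```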

Please note that these CPU graphs are not a default cPanel feature; they are something we at InMotion Hosting added to give customers a closer look at their shared account's usage:

http://www.inmotionhosting.com/support/website/server-usage/viewing-resource-usage-with-cpu-graphs

- Jacob

@jacob I don't know what the visiglyph plugin is supposed to do. What kind of URLs is it requesting? PNGs?

In order to mitigate as many such problems as possible in the future (.png requests, .asp requests, etc.), I am proposing to change b2evolution's default catch-all rule as follows:

    # Redirect any .htm .html or no-extension-file that's not an existing file or directory to index.php:
    RewriteCond %{REQUEST_FILENAME} !-d
    RewriteCond %{REQUEST_FILENAME} !-f
    RewriteRule ^[^.]+(\.(html|htm))?$ index.php [L]

Do you think that would help for the future?
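To compare the two catch-all patterns, the Python sketch below checks which missing paths each RewriteRule pattern would send to index.php. It assumes per-directory (.htaccess) context, where the pattern is matched against the path without its leading slash, and that the !-f and !-d conditions have already confirmed the file does not exist. It models only the regular expressions, not Apache itself; "some-post.html" is a hypothetical example path.

```python
import re

# Current b2evolution catch-all: "^" matches any path.
CURRENT_PATTERN = re.compile(r"^")
# Proposed: paths with no dot at all, or ending in a single .html/.htm.
PROPOSED_PATTERN = re.compile(r"^[^.]+(\.(html|htm))?$")


def routed_to_index(pattern, path):
    """True if a missing file at `path` (no leading slash) would be sent to index.php."""
    return pattern.search(path) is not None


if __name__ == "__main__":
    missing_paths = [
        "2006/08/29/post-title",
        "some-post.html",
        "plugins/visiglyph_plugin/visiglyphs/bdb3896aff664027b57eb4a98b50b2bf.png",
        "E_GuestBook.asp",
    ]
    for path in missing_paths:
        print(routed_to_index(CURRENT_PATTERN, path),
              routed_to_index(PROPOSED_PATTERN, path),
              path)
```

Under the proposed pattern, the avatar .png and .asp requests no longer reach index.php and fall through to Apache's ordinary 404 handling, while clean URLs and .html permalinks are still routed to b2evolution.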

I'm very grateful to @fplanque, @arncus and especially @jacob

I simply wouldn't have known how to solve this problem by myself. Your help is invaluable, and I hope this issue brings new ideas that get applied to future b2evolution versions.

Here is what b2evolution's forum has about Visiglyphs:

http://forums.b2evolution.net/?s=visiglyph&disp=search&submit=Search

I have some questions:

With @jacob's changes to my .htaccess, is the bots problem solved?

@fplanque From what I learned today, uninstalling some plugins doesn't stop them from still executing or doing harm within b2evolution. How should this be dealt with?

I don't know if this question belongs here: would being on a VPS have avoided all these problems, or would a VPS simply have had more resources to withstand the abuse without avoiding it?

Again, thanks everybody for taking the time and effort to help. This is what I like about b2evolution and www.inmotionhosting.com

Yeah @fplanque, the visiglyph plugin requests that were in turn causing the redirect to index.php were all for .png images that seemed to be user avatars.

So the .htaccess rules you've proposed for a default install would definitely help curb CPU usage in similar scenarios. It looks like all the other requests are either directly for index.php or for one of the other URLs like blog5.php, so I don't foresee those rules breaking anything.

That produces a normal 404 response when non-existent files are requested, so you could then set a 404 error page using .htaccess:

http://www.inmotionhosting.com/support/website/how-to/setting-a-404-error-page-via-htaccess

@gcasanova No problem, that's what we're here for! I went ahead and added these rules to your .htaccess file in place of the ones I posted earlier in this discussion, which only blocked requests for the visiglyph plugin or .asp files.

Unfortunately, it looks like when a plugin is removed while links to its files remain in the main code base, you get the behavior you saw here: links to missing resources. I believe the .htaccess rules now in place should prevent that problem if you remove any further plugins.

A VPS would have avoided the suspension, since your cumulative CPU usage was the issue at hand and it never got high enough to warrant a suspension on the VPS platform. However, the issues would still have existed; there would just have been more CPU resources to throw at them.

Now that bad bots are being blocked via .htaccess rules, along with missing resources not causing additional .php calls, I think you should be fine on Shared going forward for the most part.

That of course could change if your traffic patterns change up again drastically, but now that raw access log archiving is enabled on your account, it should be easier to pinpoint problematic request patterns on your account.

- Jacob

Hello @gcasanova,

I ask these questions of all users who report a performance issue with their hosting provider.

Do you have any statistics about your visits? Google Analytics or any other tool is OK. It's kind of obvious, but I always start by pointing out that more visits require more resources (unless the user directly reports an attack). Can your hosting provider, or your current account, handle that traffic level? If you have already checked this and your site is simply under attack, then the basic recommendations given in the other posts are the way to solve the problem.

Performance is one of the top priorities for the development team. Of course, as with everything, there is always room for improvement in this area, but under normal conditions the site should work without problems. Here is some information about caching, and performance in general, that could be useful if you haven't read it before:

http://b2evolution.net/man/advanced-topics/performance/caching-and-cache-levels

http://b2evolution.net/man/advanced-topics/performance/performance-optimization

Best regards!